Example studies

Small example

Config file: config/config.json.

Command:

snakemake --cores all --use-singularity --configfile config/config.json

Approximate time: 10 min. on a 3.1 GHz Dual-Core Intel Core i5.

This study is an example of data scenario V) Fully generated based on three continuous datasets, sampled using the iid module, corresponding to three realisations of a random linear Gaussian structural equation model (SEM) with random DAG.

The DAGs are sampled from a restricted Erdős–Rényi model using the pcalg_randdag module and the weight parameters are sampled uniformly using the sem_params module.

For simplicity, we use only a few structure learning modules here (bidag_itsearch, tetrad_fges, bnlearn_tabu, pcalg_pc ) with different parameter settings.

The benchmark_setup section of this study is found in Listing 1.

1"benchmark_setup": {

2 "data": [

3 {

4 "graph_id": "avneigs4_p20",

5 "parameters_id": "SEM",

6 "data_id": "standardized",

7 "seed_range": [1, 3]

8 }

9 ],

10 "evaluation": {

11 "benchmarks": {

12 "filename_prefix": "example/",

13 "show_seed": false,

14 "errorbar": true,

15 "errorbarh": false,

16 "scatter": true,

17 "path": true,

18 "text": false,

19 "ids": [

20 "fges-sem-bic",

21 "tabu-bge",

22 "itsearch-bge",

23 "pc-gaussCItest"

24 ]

25 },

26 "graph_true_plots": true,

27 "graph_true_stats": true,

28 "ggally_ggpairs": true,

29 "graph_plots": [

30 "fges-sem-bic",

31 "tabu-bge",

32 "itsearch-bge",

33 "pc-gaussCItest"

34 ],

35 "mcmc_traj_plots": [],

36 "mcmc_heatmaps": [],

37 "mcmc_autocorr_plots": []

38 }

39 }

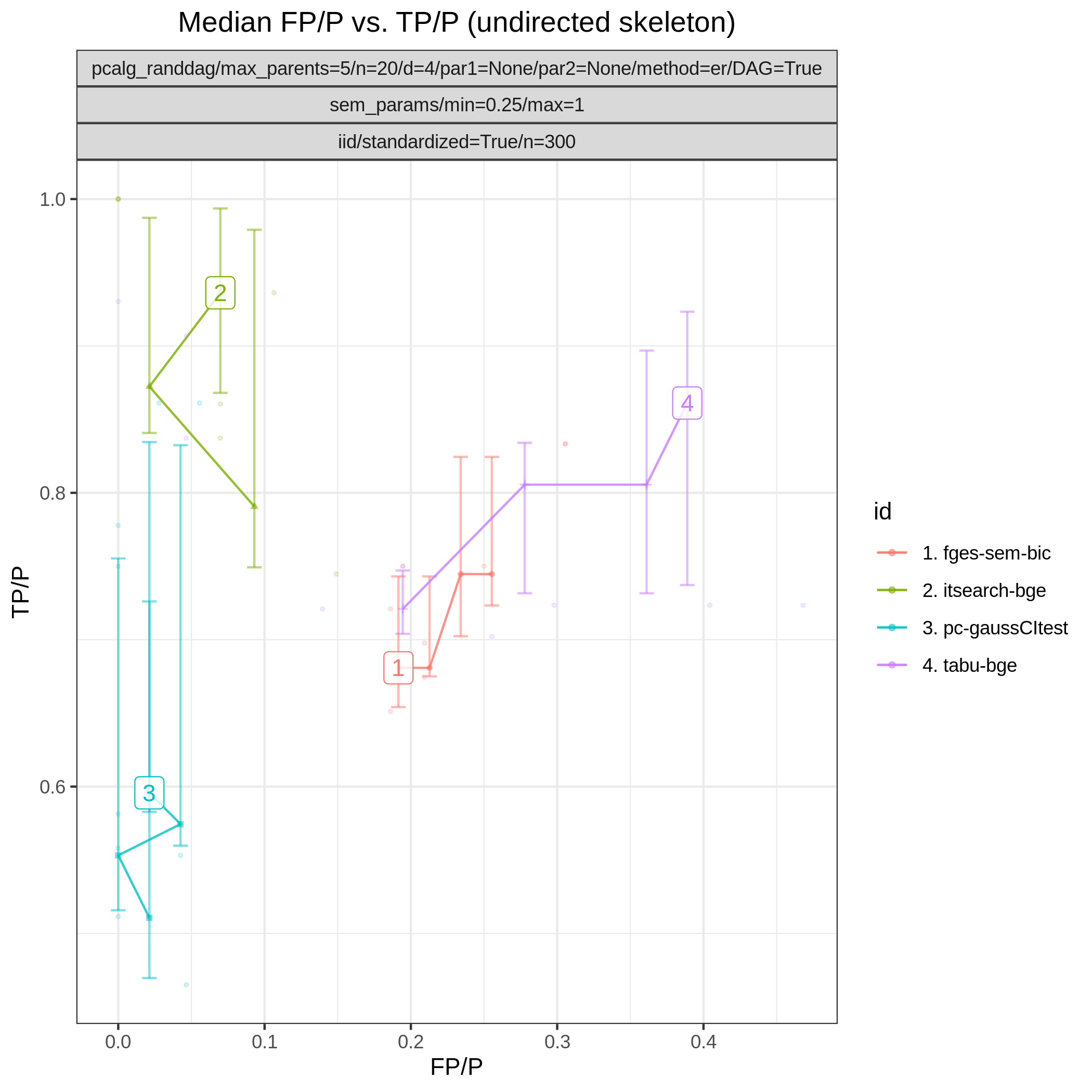

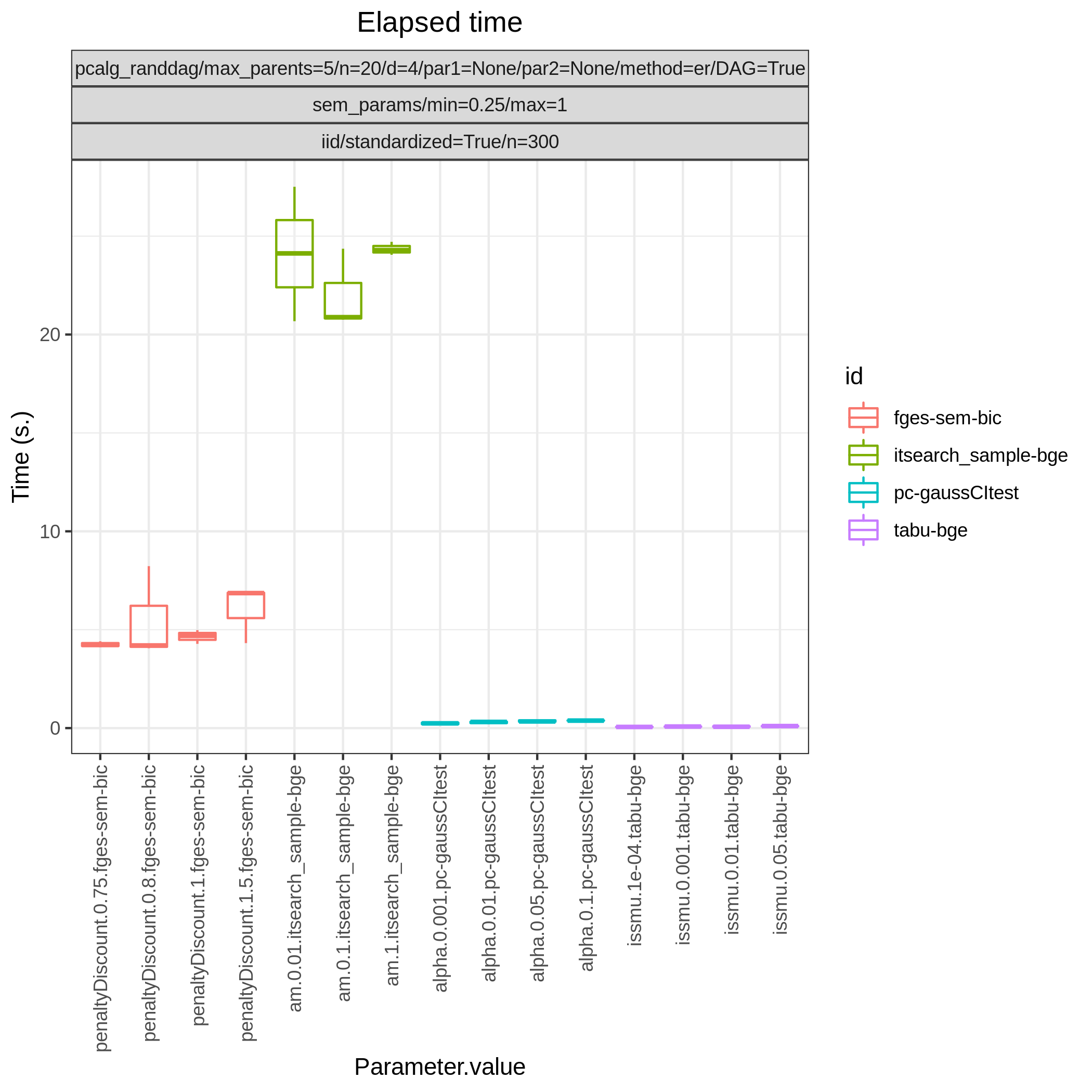

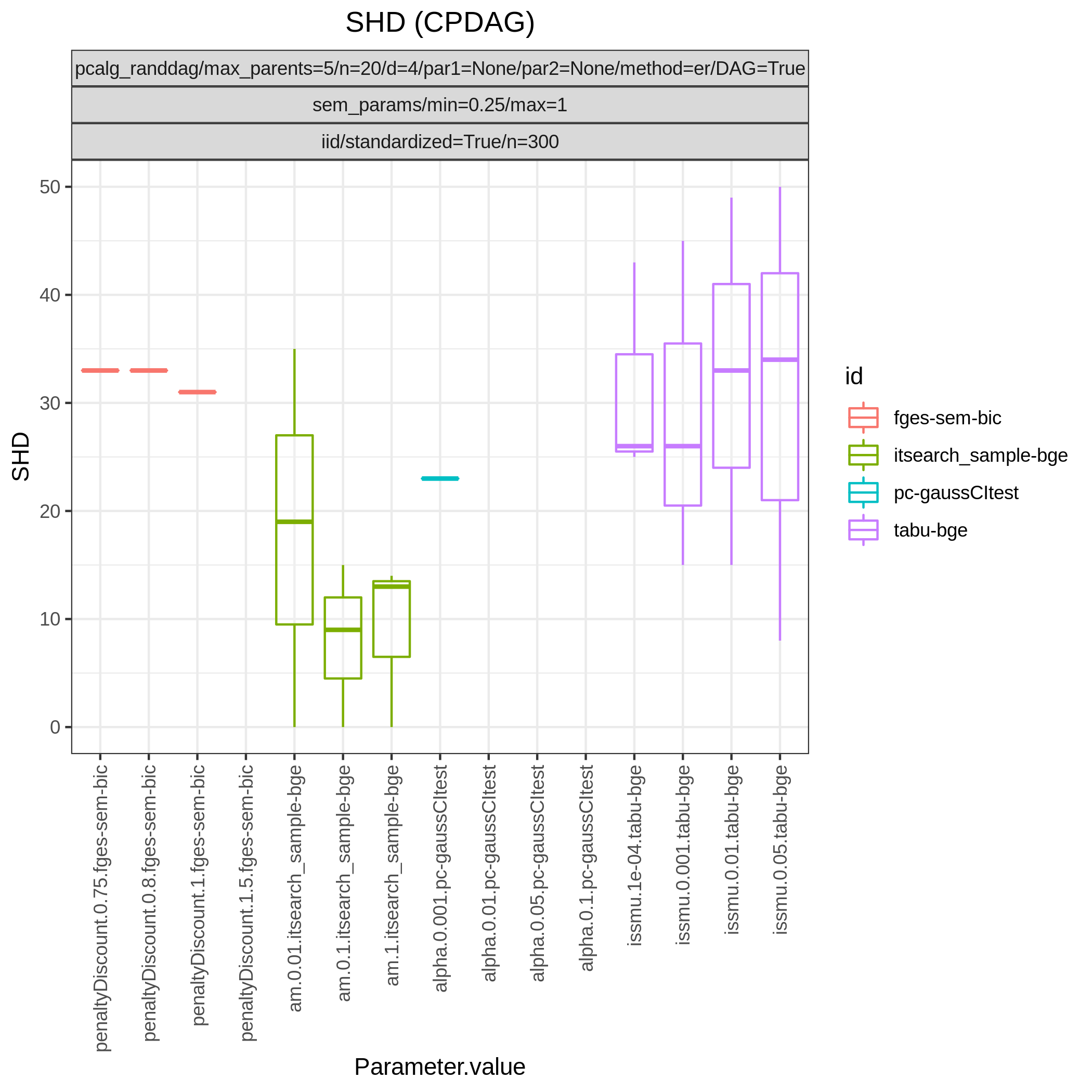

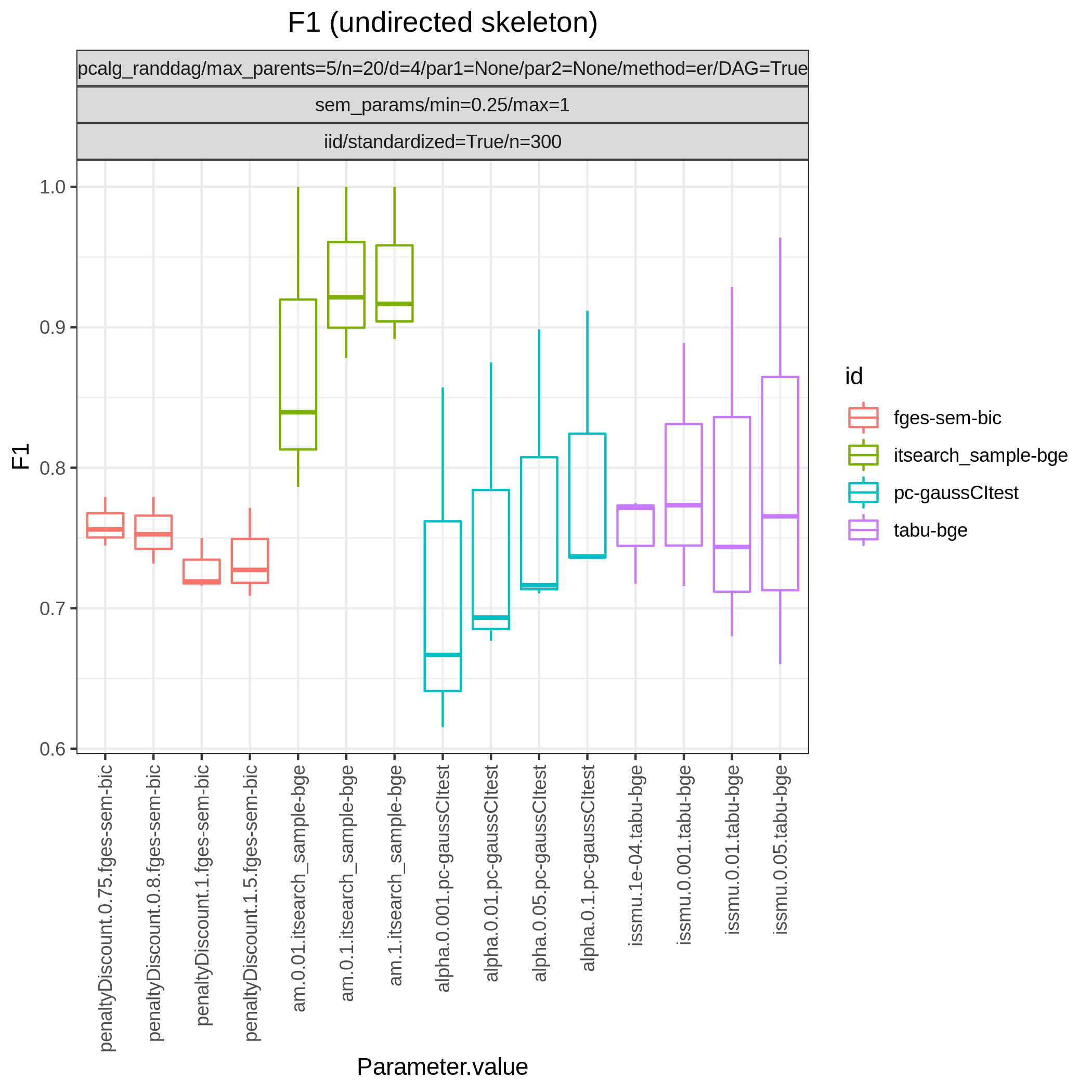

The following plots are generated by the benchmarks module

From graph_true_plots and graph_plots we get

PC vs. dual PC

Config file: config/paper_pc_vs_dualpc.json.

Command:

snakemake --cores all --use-singularity --configfile config/paper_pc_vs_dualpc.json

Approximate time: 20 min.

This is a small scale simulation study from data scenario V) Fully generated where the PC (pcalg_pc) and the dual PC (dualpc) algorithms are compared side-by-side.

We consider data from 10 random Bayesian network models \(\{(G_i,\Theta_i)\}_{i=1}^{10}\), where each graph \(G_i\) has \(p=80`\) nodes and is sampled using the pcalg_randdag module.

The parameters \(\Theta_i\) are sampled from the random linear Gaussian SEM using the sem_params module with min =0.25, max =1.

We draw one standardised dataset \(\mathbf Y_i\) of size \(n=300\) from each of the models using the iid, module.

The benchmark_setup section of this study is found in Listing 2.

1"benchmark_setup": {

2 "data": [

3 {

4 "graph_id": "avneigs4_p80",

5 "parameters_id": "SEM",

6 "data_id": "standardized",

7 "seed_range": [1, 10]

8 }

9 ],

10 "evaluation": {

11 "benchmarks": {

12 "filename_prefix": "paper_pc_vs_dualpc/",

13 "show_seed": true,

14 "errorbar": true,

15 "errorbarh": false,

16 "scatter": true,

17 "path": true,

18 "text": false,

19 "ids": [

20 "pc-gaussCItest",

21 "dualpc"

22 ]

23 },

24 "graph_true_plots": true,

25 "graph_true_stats": true,

26 "ggally_ggpairs": false,

27 "graph_plots": [

28 "pc-gaussCItest",

29 "dualpc"

30 ],

31 "mcmc_traj_plots": [],

32 "mcmc_heatmaps": [],

33 "mcmc_autocorr_plots": []

34 }

35}

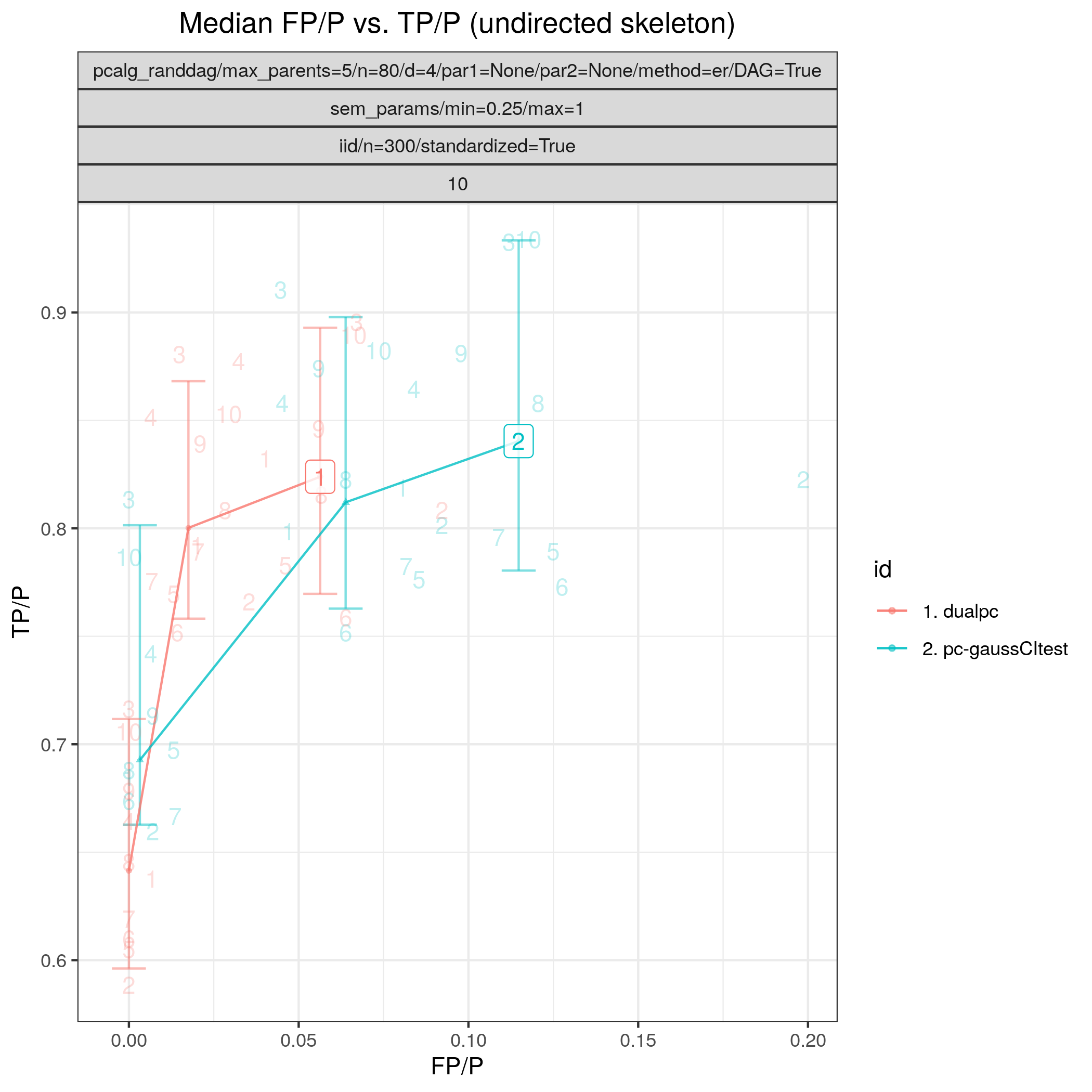

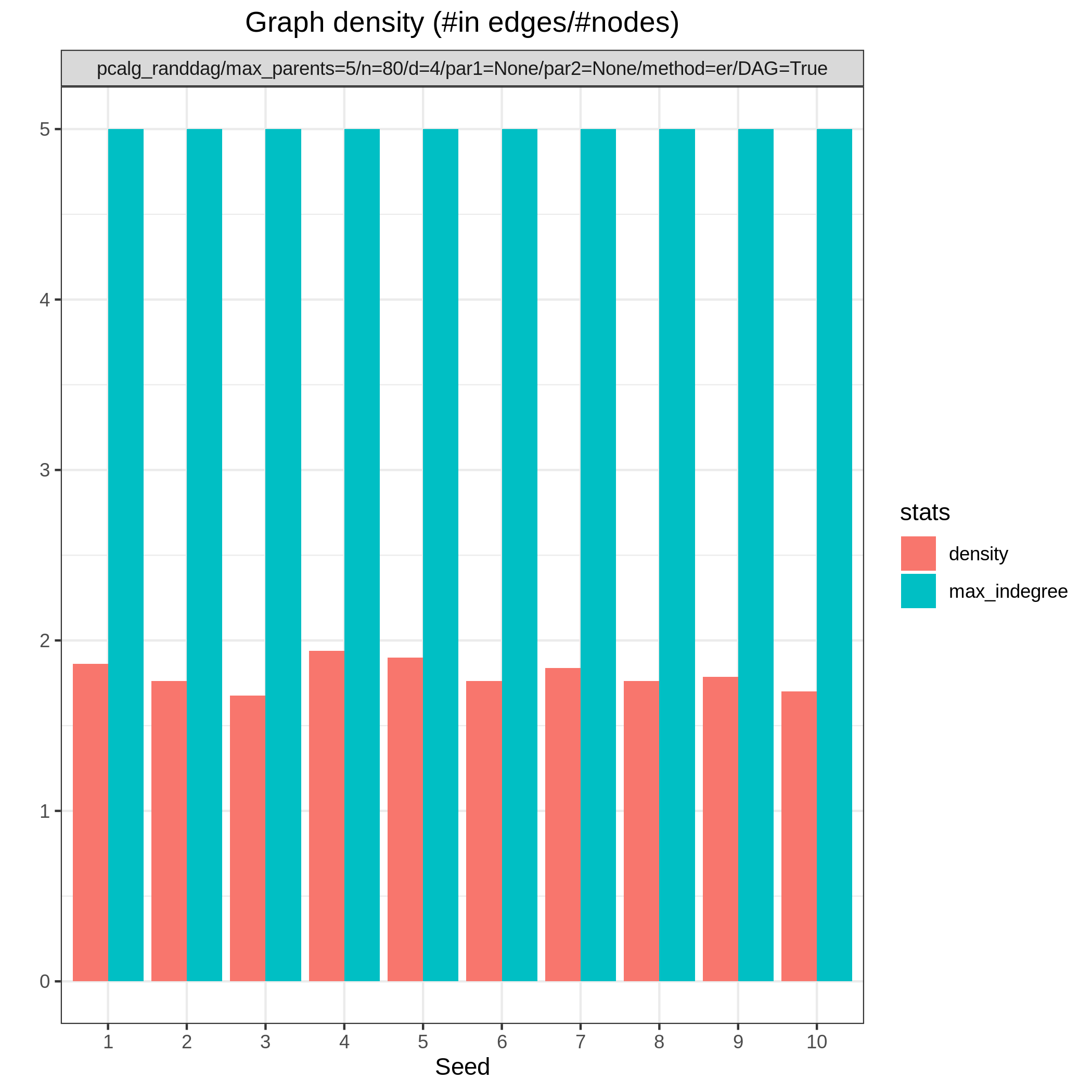

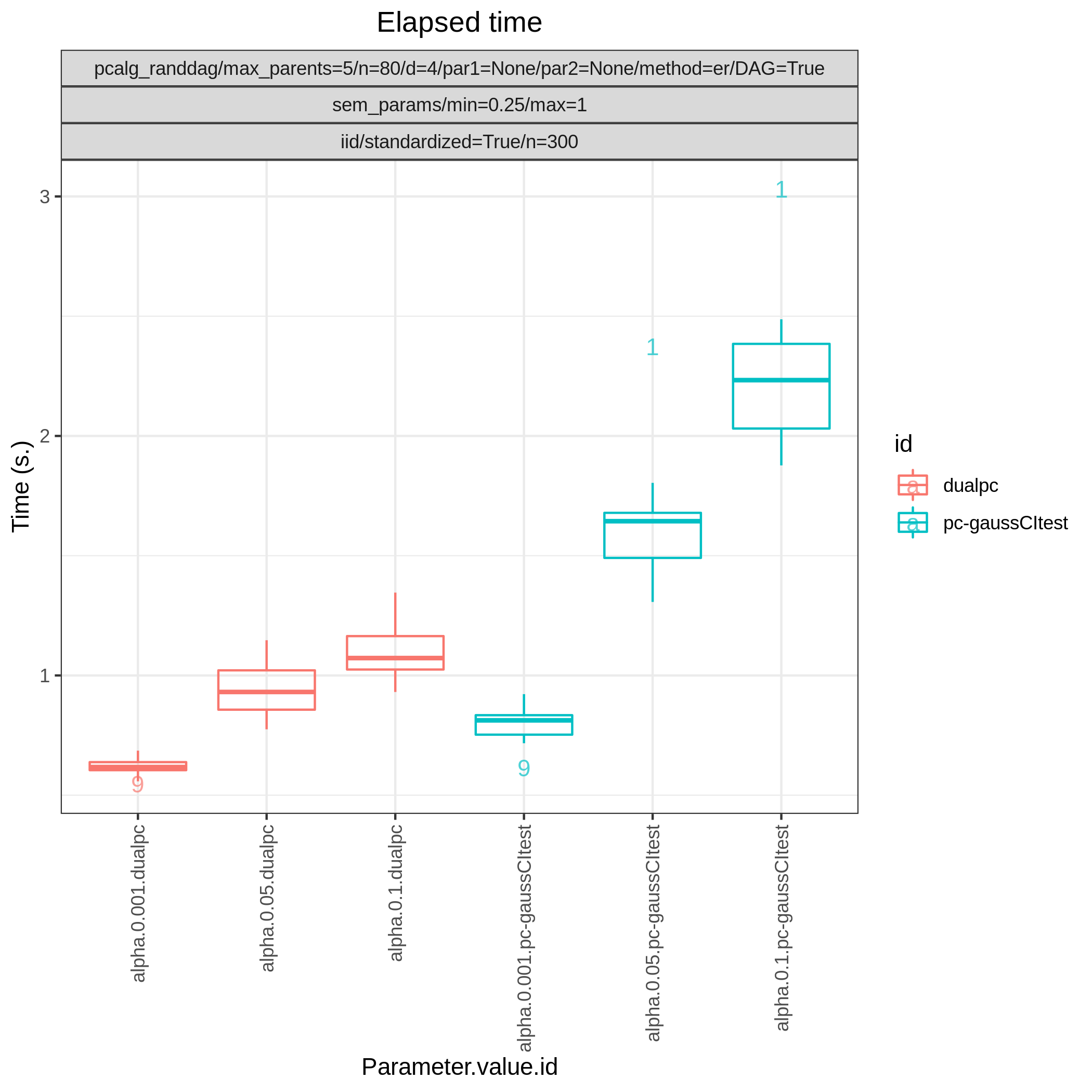

Results from the benchmarks and the graph_true_stats module, where we have focused on the undirected skeleton for evaluations since this is the part where the algorithms mainly differ. More specifically, from Fig. 1, showing the FP/P and TP/P, we see that the dual PC has superior performance for significance levels alpha=0.05,0.01. Apart from the curves, the numbers in the plot indicates the seed number of the underlying dataset and models for each run. We note that model with seed number 3 seems give to good results for both algorithms and looking into Fig. 2, we note that the graph with seed number 3 corresponds to the one with the lowest graph density \(|E| / |V|\). The box plots from Fig. 4 shows the computational times for the two algorithms, where the outliers are labeled by the model seed numbers. We note e.g., that seed number 1 gave a bit longer computational time for the standard PC algorithm and from Fig. 2 we find that the graph with seed number 1 has relatively high graph density. The conclusion of the F1 score plot in Fig. 3. are in line with the FP/P / TP/P results from Fig. 1.

Fig. 1 FP/P vs. TP/P.

Fig. 2 Graph density.

Fig. 3 F1.

Fig. 4 Timing.

Sachs et al. 2005 data

Config file: config/paper_sachs.json.

Command:

snakemake --cores all --use-singularity --configfile config/paper_sachs.json

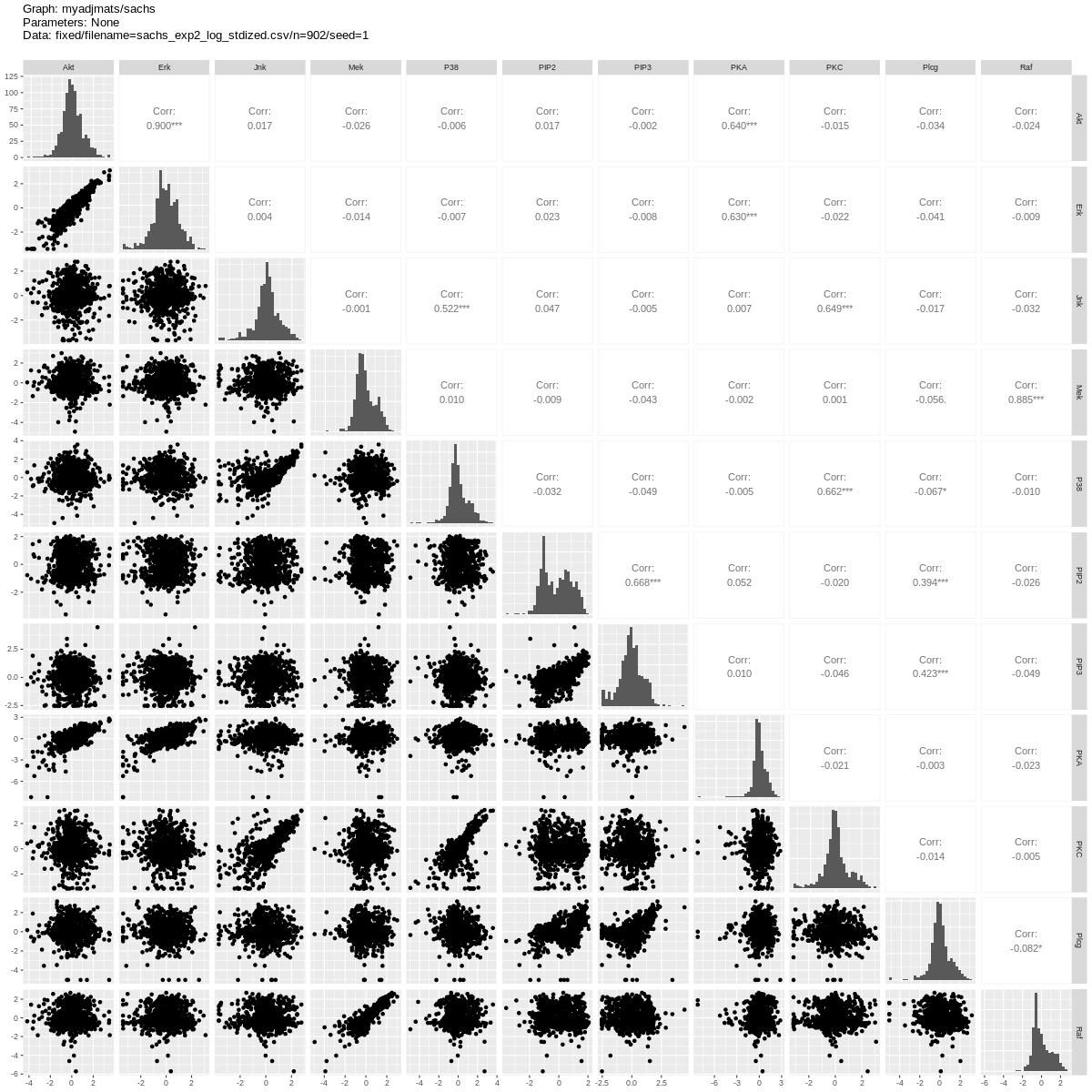

We show estimation results for the logged and standardized version (2005_sachs_2_cd3cd28icam2_log_std.csv) of the second dataset anti-CD3/CD28 + ICAM-2 from Sachs et al.[1] with 902 observations. The data is visualised in Fig. 5 with independent and pairwise scatter plots using the ggally_ggpairs module.

Fig. 5 Scatter plots.

Listing 3 shows the benchmark_setup section of the config file.

This setup falls into II) Data analysis with validation since the graph_id s set to the filename of a fixed graph.

1"benchmark_setup": {

2 "data": [

3 {

4 "graph_id": "sachs.csv",

5 "parameters_id": null,

6 "data_id": "2005_sachs_2_cd3cd28icam2_log_std.csv",

7 "seed_range": null

8 }

9 ],

10 "evaluation": {

11 "benchmarks": {

12 "filename_prefix": "paper_sachs/",

13 "show_seed": false,

14 "errorbar": false,

15 "errorbarh": false,

16 "scatter": true,

17 "path": true,

18 "text": false,

19 "ids": [

20 "gobnilp-bge",

21 "boss-sem-bic",

22 "grasp-sem-bic",

23 "notears-l2",

24 "fges-sem-bic",

25 "hc-bge",

26 "itsearch-bge",

27 "mmhc-bge-zf",

28 "omcmc-bge",

29 "pc-gaussCItest",

30 "tabu-bge"

31 ]

32 },

33 "graph_true_stats": true,

34 "graph_true_plots": true,

35 "ggally_ggpairs": true,

36 "graph_plots": [

37 "gobnilp-bge",

38 "boss-sem-bic",

39 "grasp-sem-bic",

40 "notears-l2",

41 "fges-sem-bic",

42 "hc-bge",

43 "itsearch-bge",

44 "mmhc-bge-zf",

45 "omcmc-bge",

46 "pc-gaussCItest",

47 "tabu-bge"

48 ],

49 "mcmc_traj_plots": [],

50 "mcmc_heatmaps": [],

51 "mcmc_autocorr_plots": []

52 }

53}

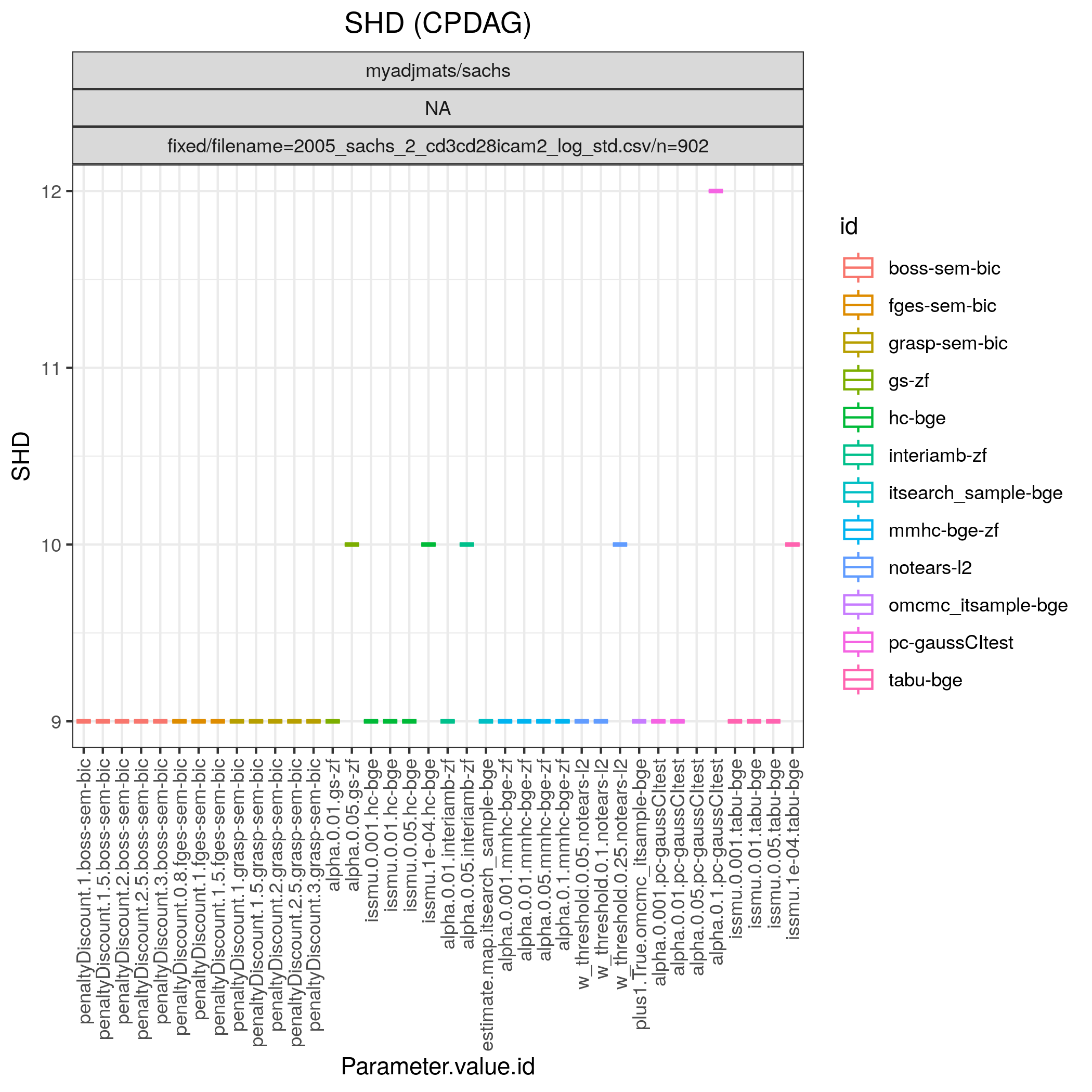

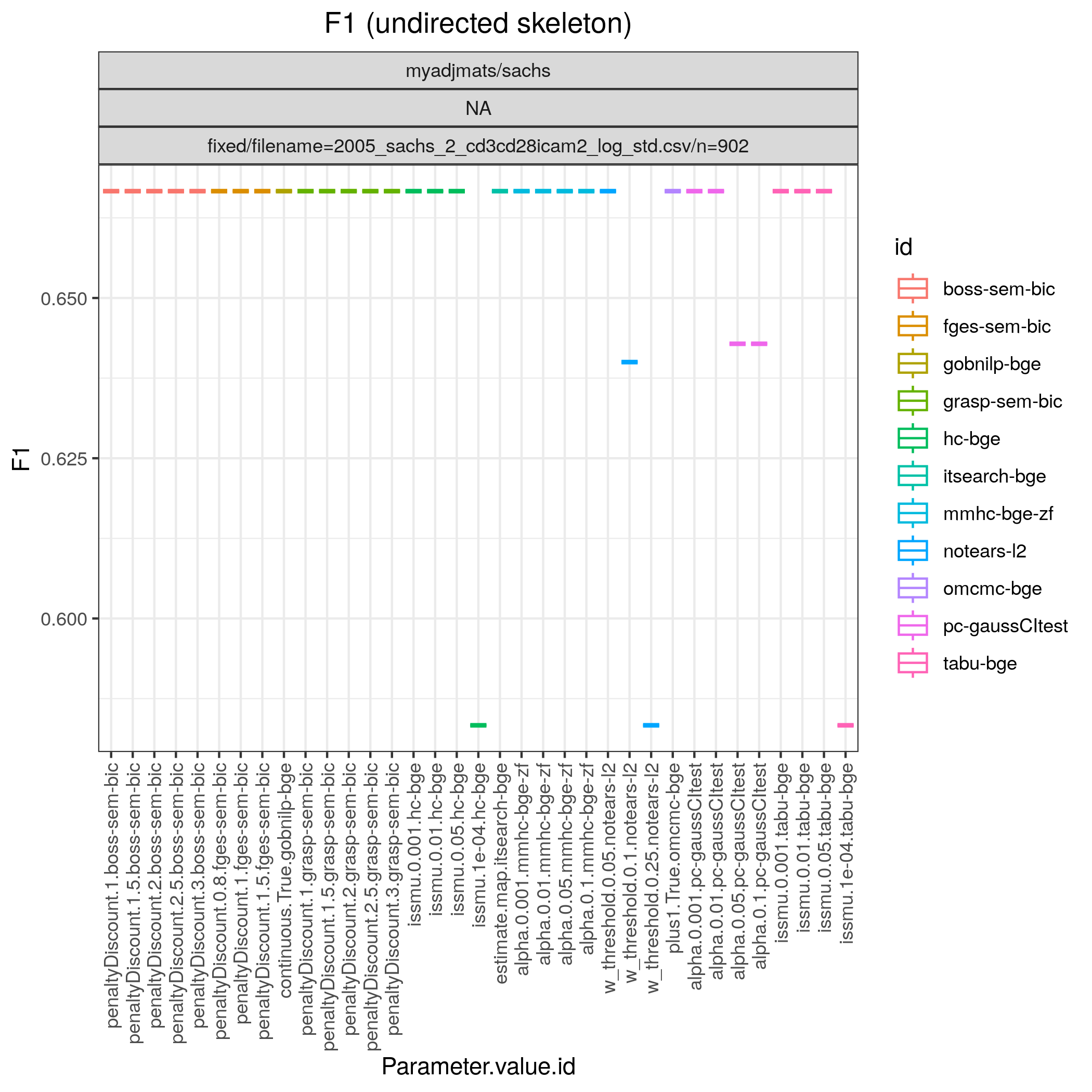

Fig. 6 shows Hamming distance between the edge sets of the true and the estimated CPDAGs (SHD) and the F1 score based on the undirected skeleton from 10 algorithms with different parametrisations, produced by the benchmarks module. From this figure we can directly conclude that all algorithms have a parametrisation that gives the minimal SHD of 9 and maximal F1 score of 0.67.

Fig. 6 SHD.

Fig. 7 F1.

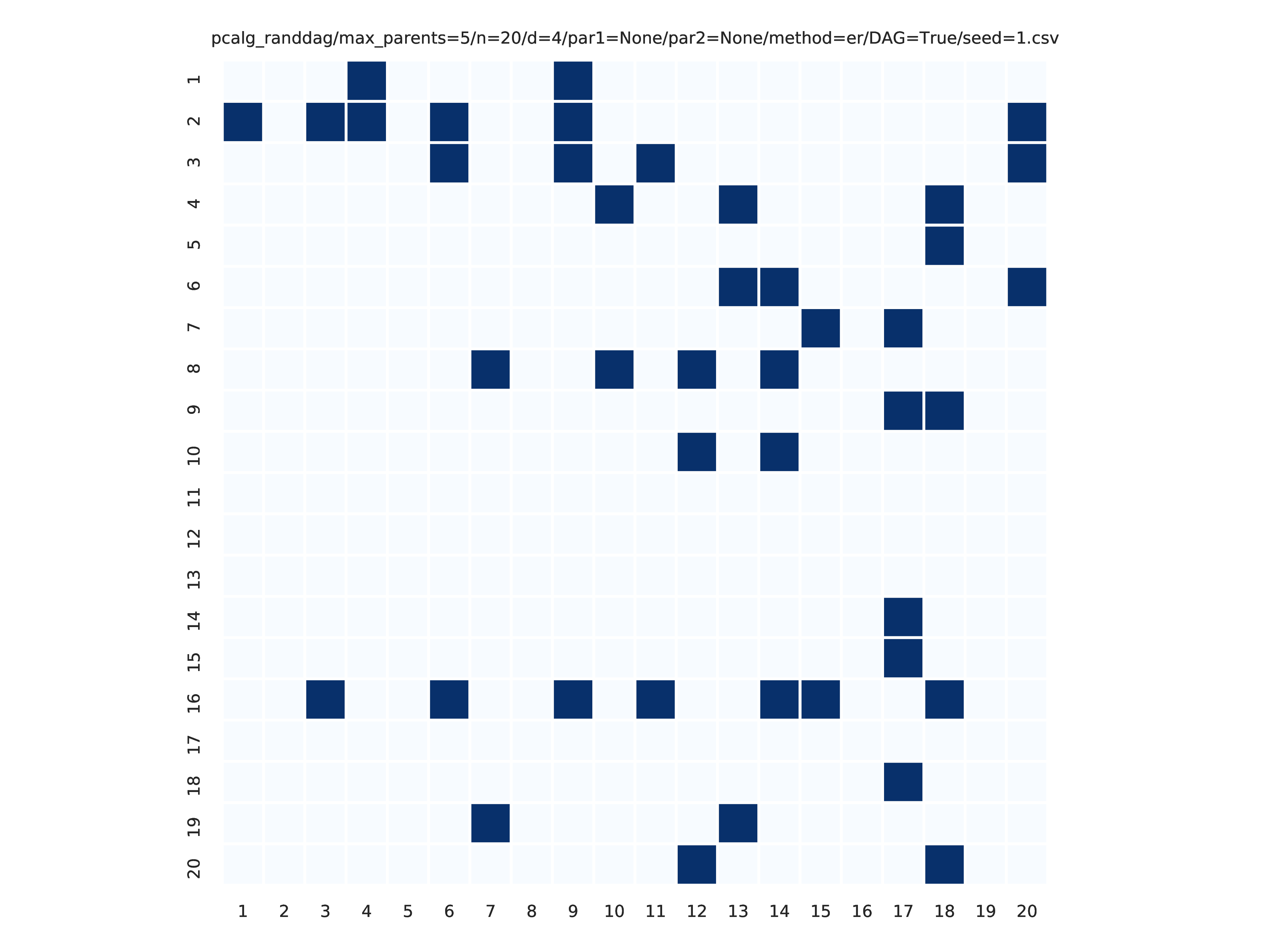

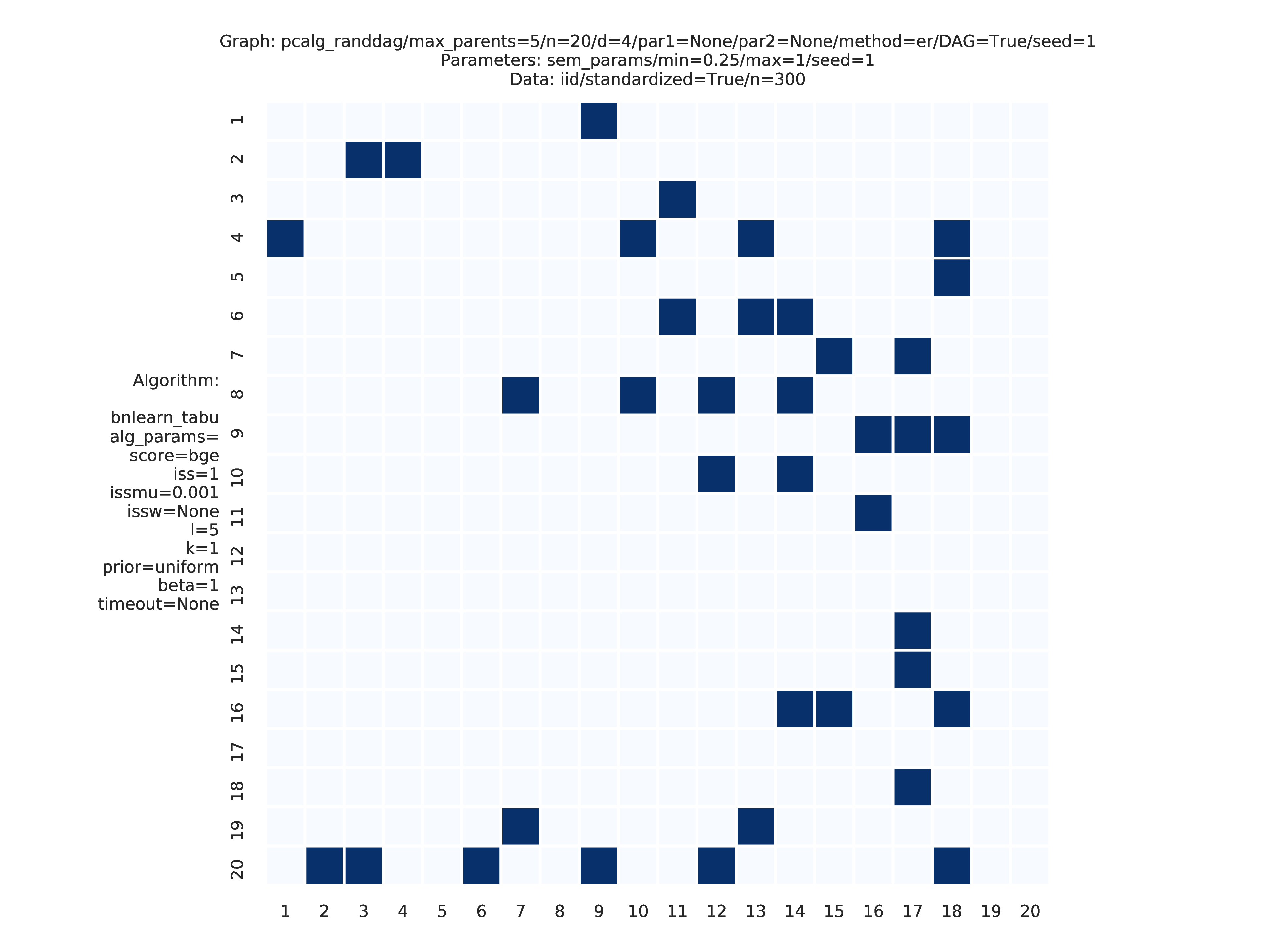

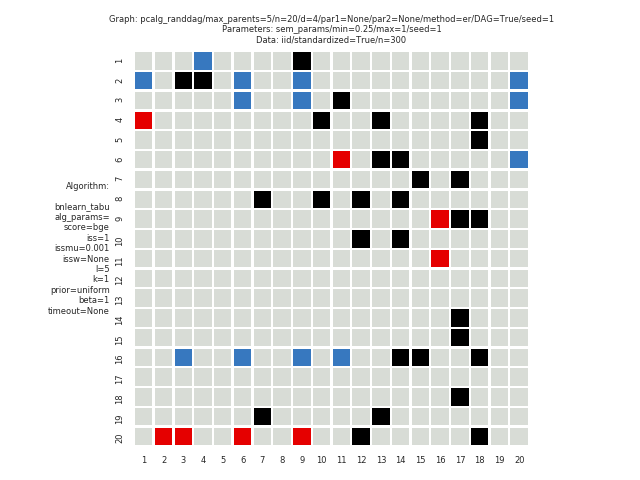

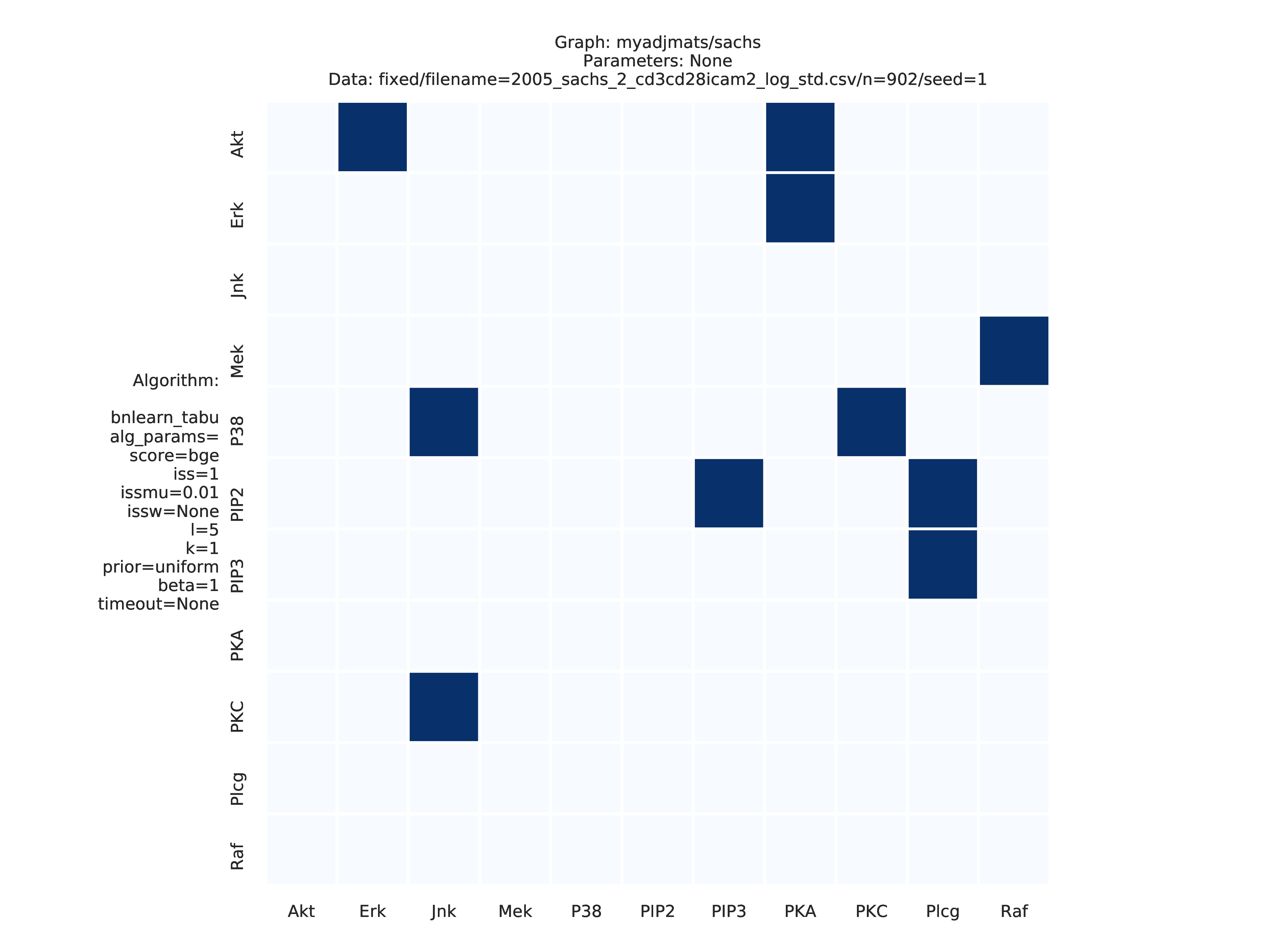

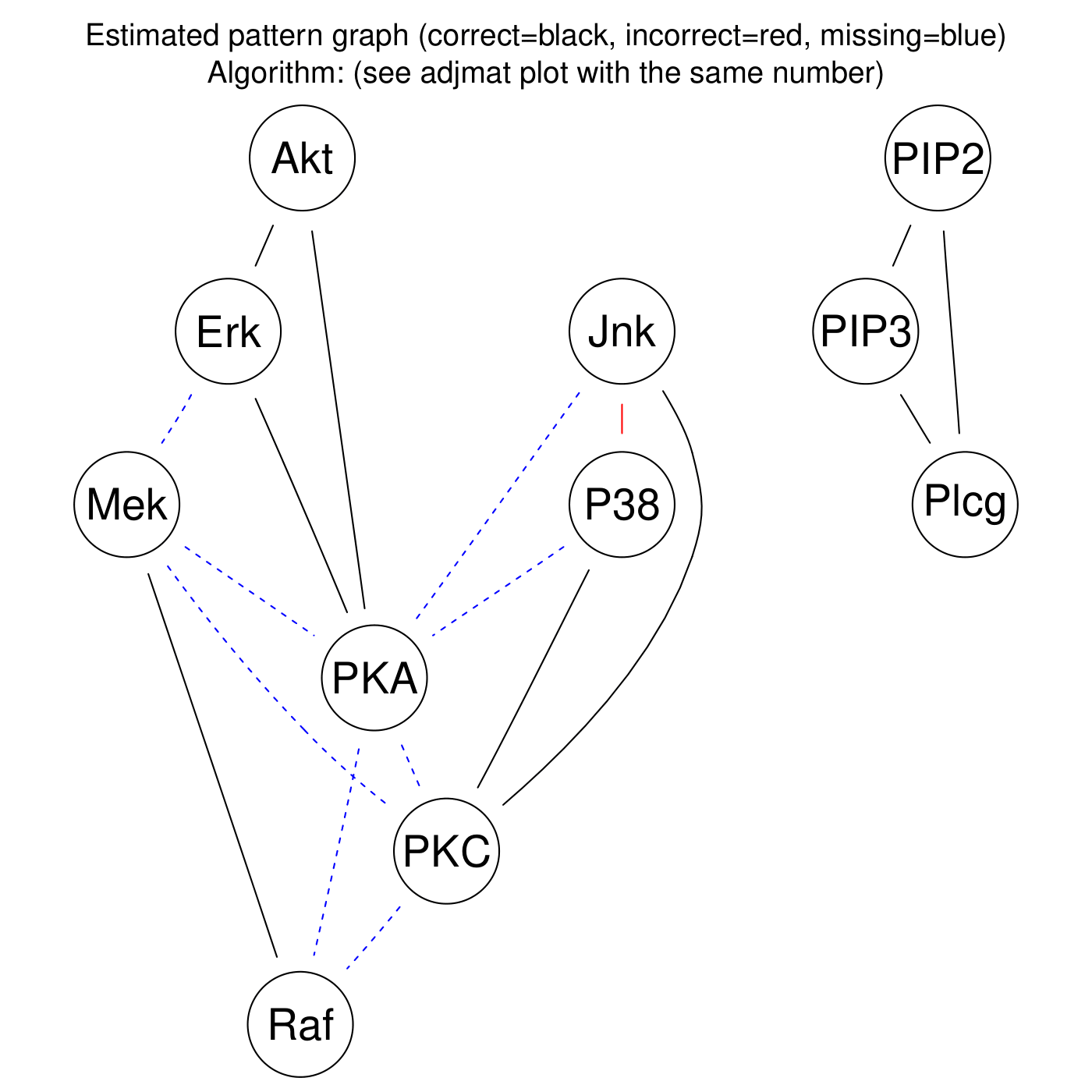

Fig. 8 shows the adjacency matrix produced by the graph_plots module of the DAG estimated by the bnlearn_tabu module.

Fig. 8 Estimated adjmat.

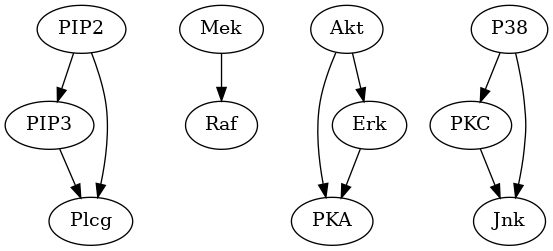

Fig. 9 Estimated graph.

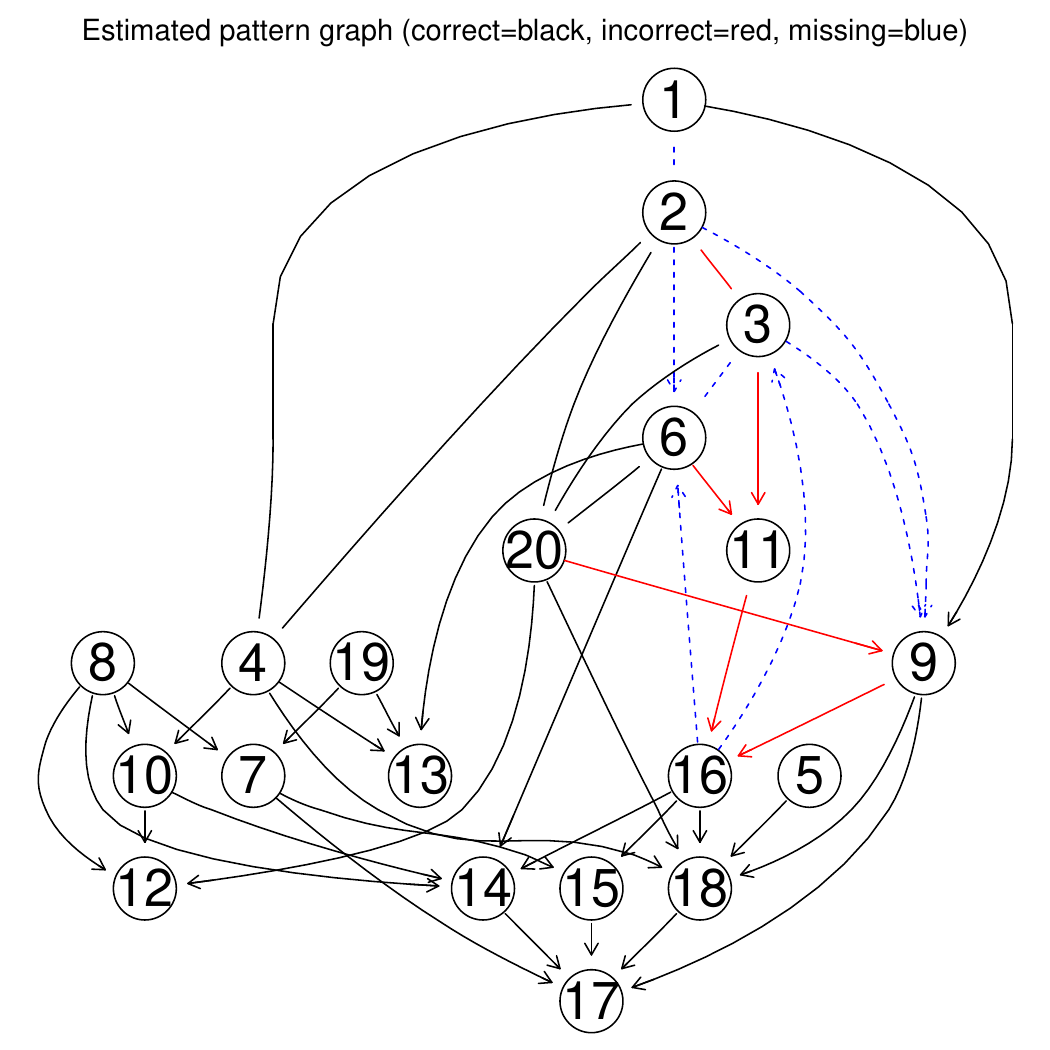

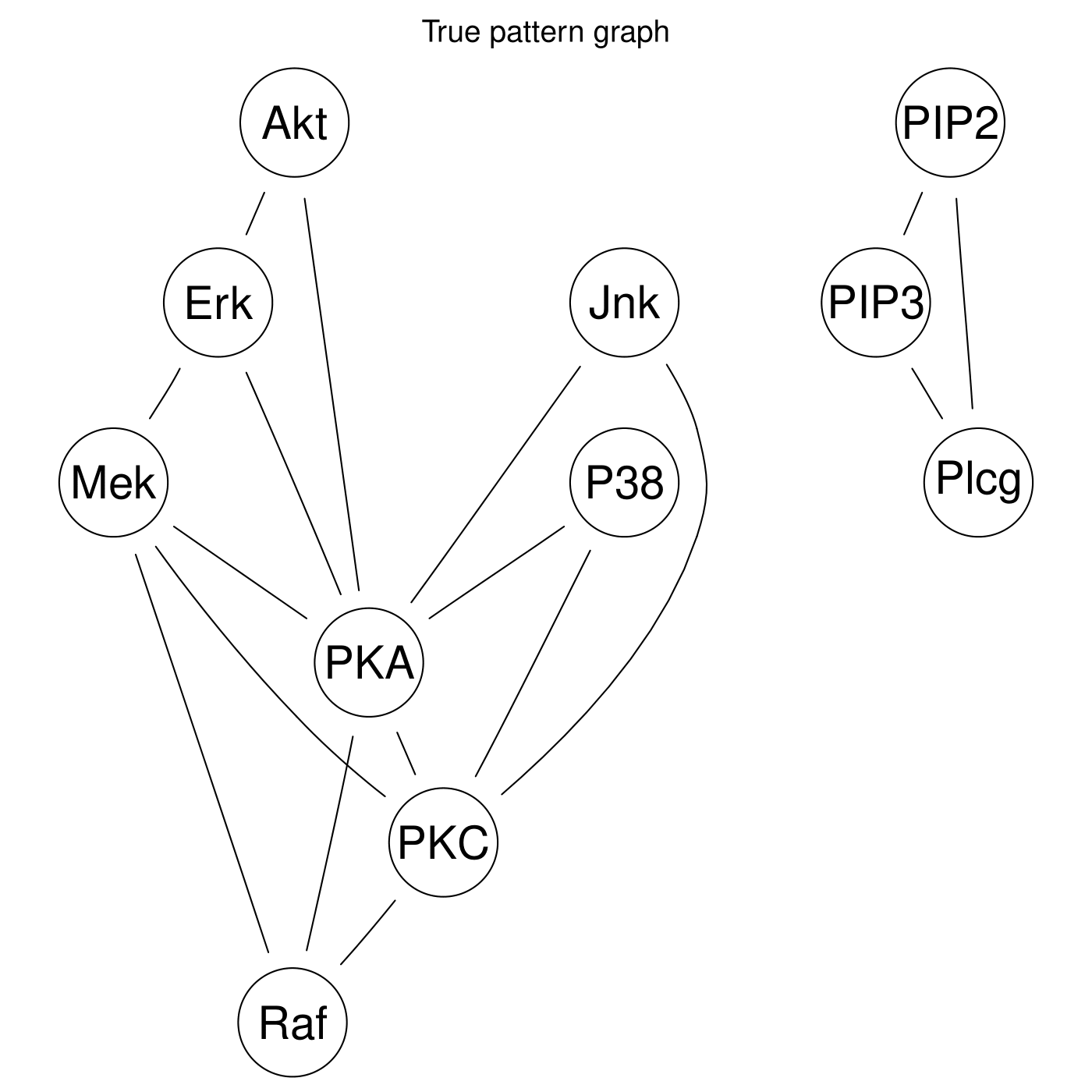

Fig. 10 and Fig. 11 shows the pattern graph of both the true and a DAG estimated by the bnlearn_tabu module, where the black edges are correct in both subfigures. The missing and incorrect edges are colored in blue and red respectively in Fig. 11.

Fig. 10 True pattern graph.

Fig. 11 Diff pattern graph.

References

Random Gaussian SEM (small study)

Config file: config/paper_er_sem_small.json.

Command:

snakemake --cores all --use-singularity --configfile config/paper_er_sem_small.json

Approximate time: 40 min. on a 3.1 GHz Dual-Core Intel Core i5.

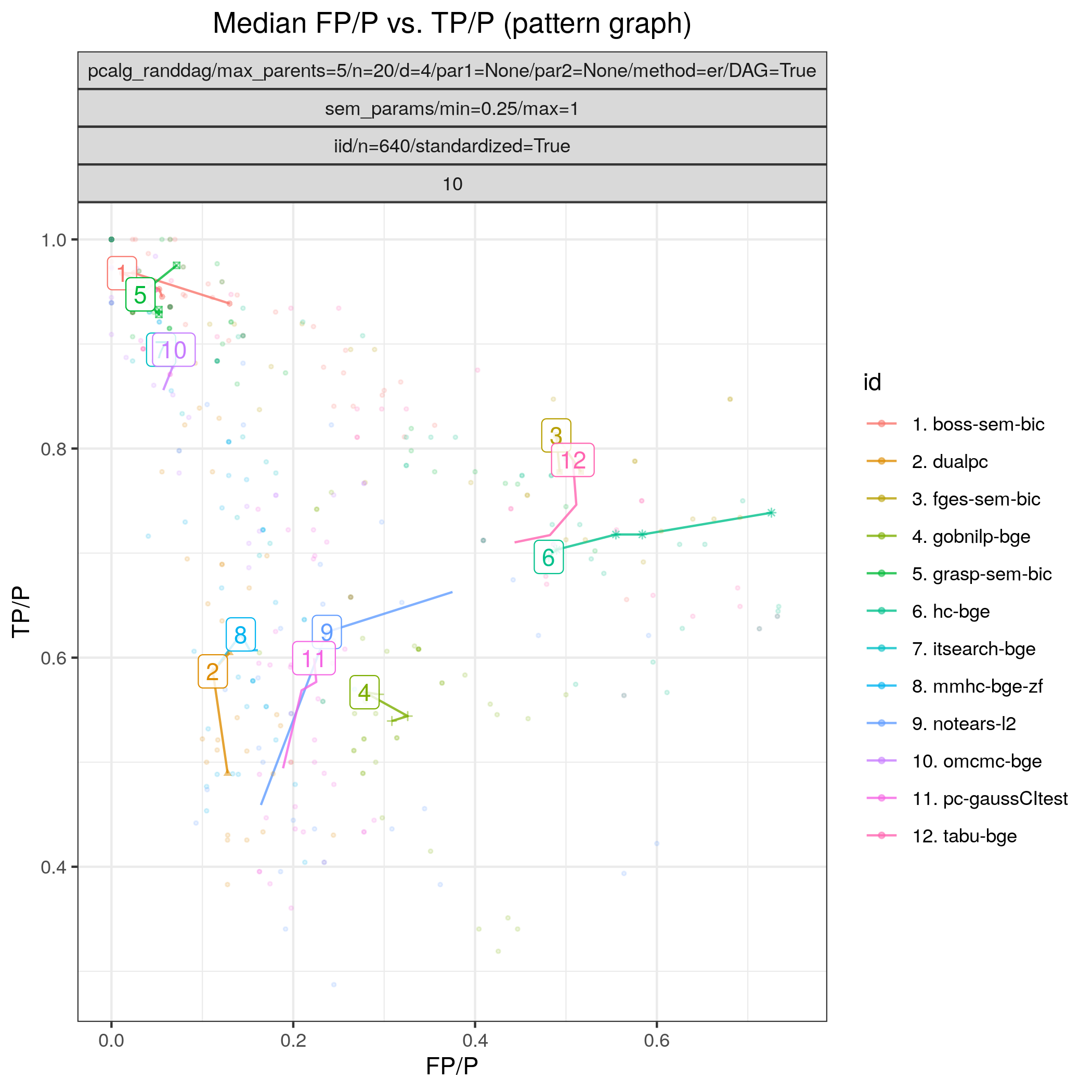

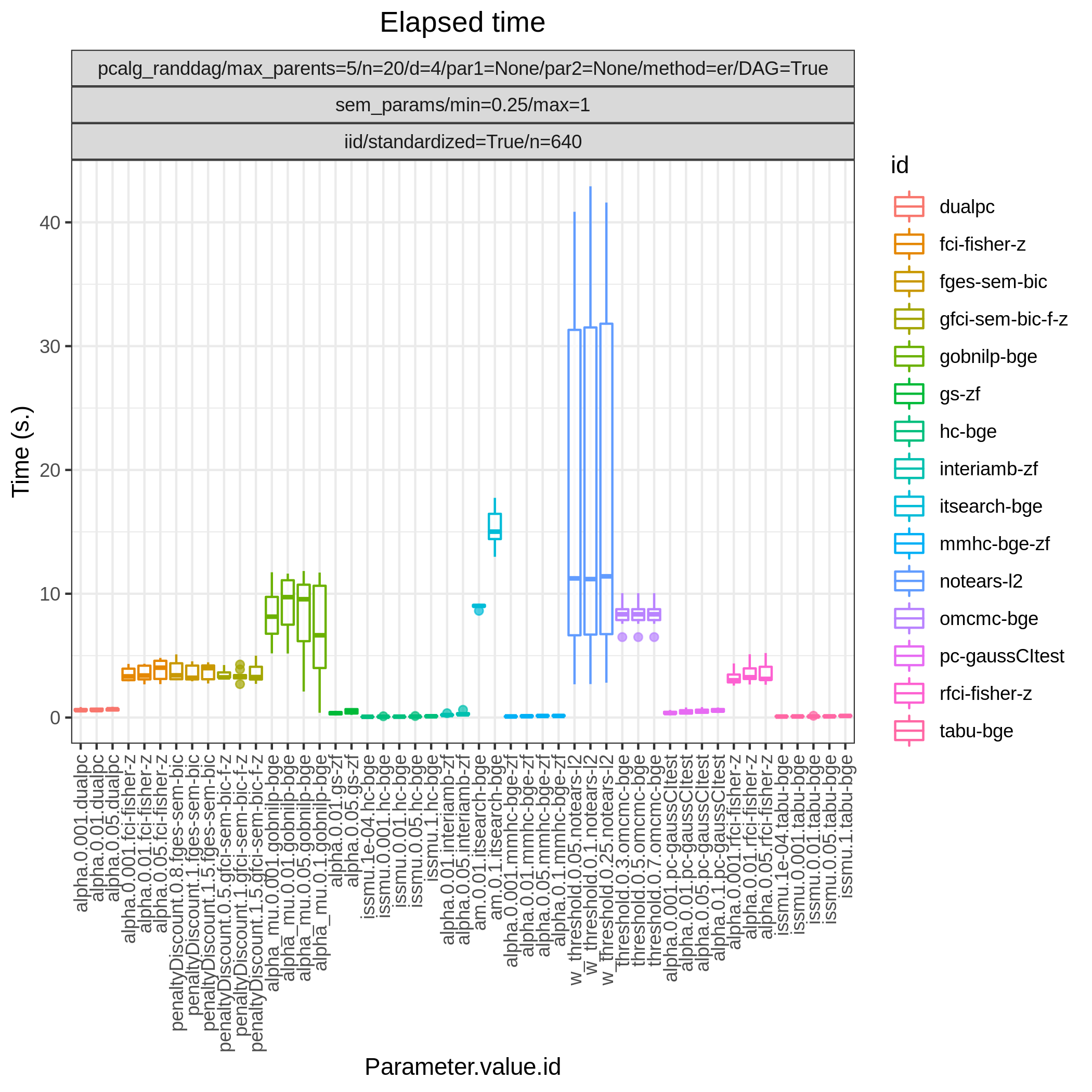

In the present study we consider a broader simulation over 13 algorithms in a similar Gaussian data setting as in PC vs. dual PC, with the only difference that the number of nodes is reduced to 20 and the number of seeds is increased to 10.

Fig. 12 FP/P vs. TP/P.

Fig. 13 Timing.

Fig. 12 shows FP/P / TP/P based on pattern graphs and Fig. 13 shows the computational times. tetrad_boss (boss-sem-bic), tetrad_grasp (grasp-sem-bic), bidag_itsearch, (itsearch-bge), and bidag_order_mcmc (omcmc-bge) have the best (near perfect) performance.

Random binary Bayesian network

Config file: config/paper_er_bin.json.

Command:

snakemake --cores all --use-singularity --configfile config/paper_er_bin.json

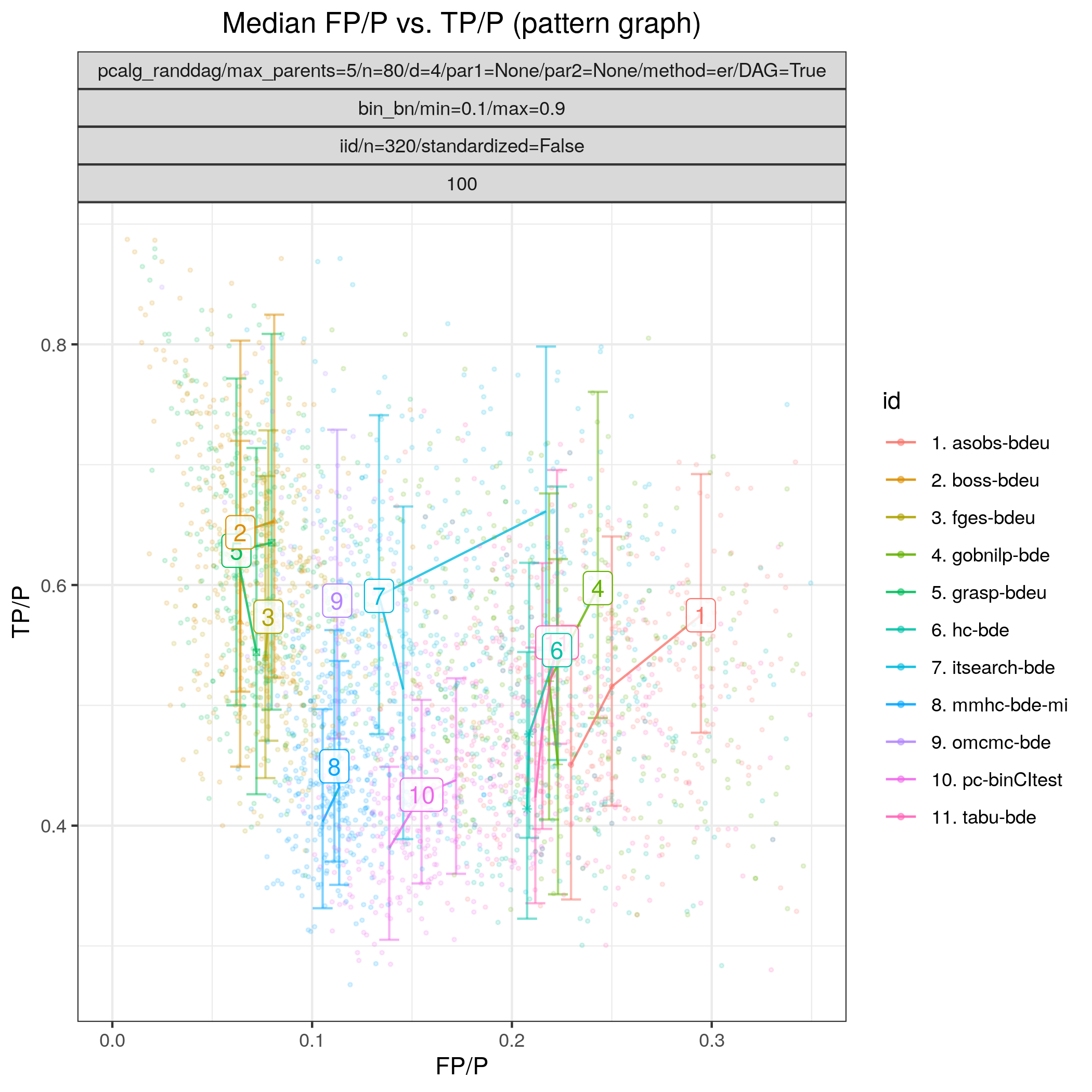

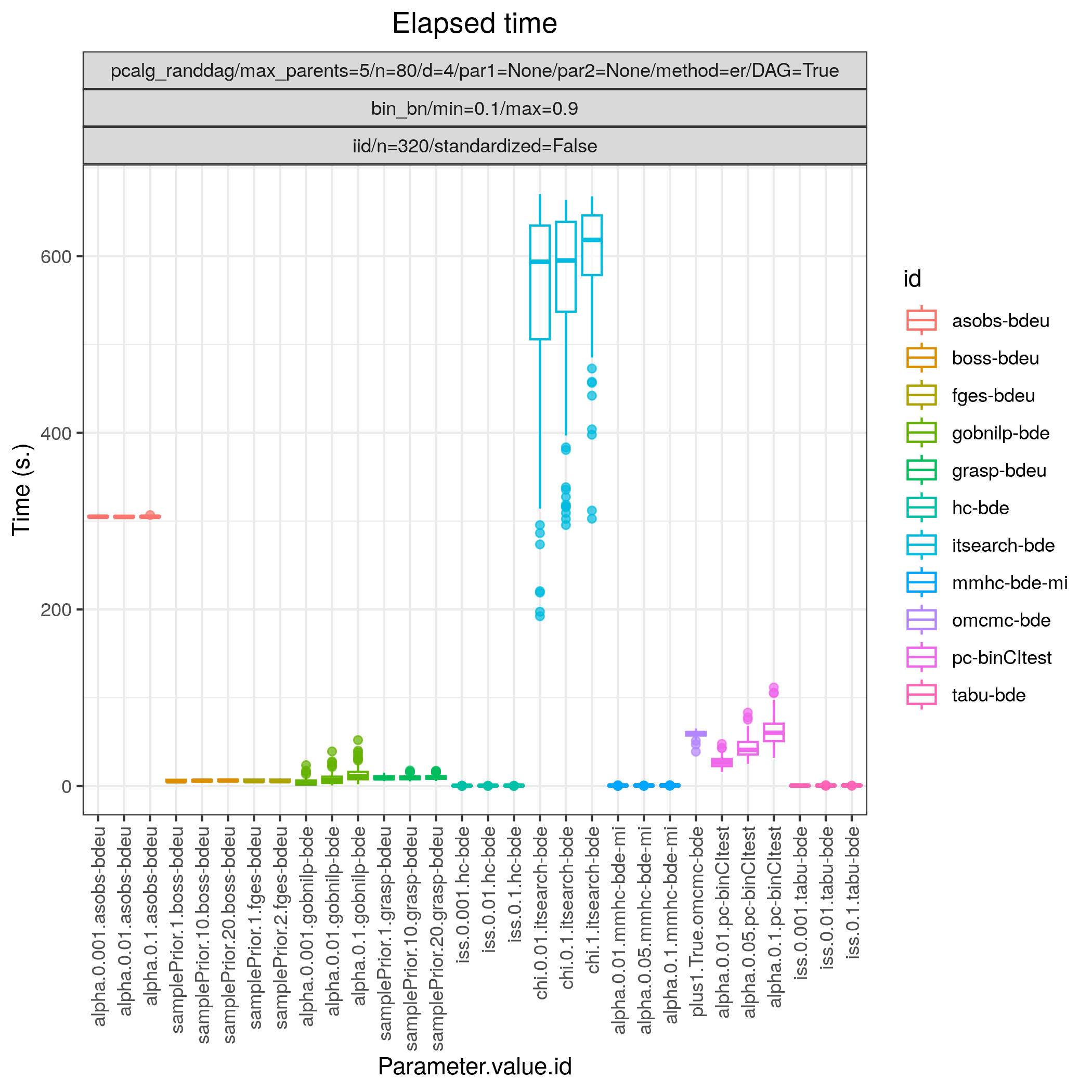

In this example we study a binary valued Bayesian network, where both the graph \(G\) and the parameters \(\Theta\) are regarded as random variables. More specifically, we consider 100 models \(\{(G_i,\Theta_i)\}_{i=1}^{100}\), where each \(G_i\) is sampled according to the Erdős–Rényi random DAG model using the pcalg_randdag module, where the number of nodes is p=80 (p is called n in this module), the average number of neighbours (parents) per node is 4 (2) and the maximal number of parents per node is 5.

"pcalg_randdag": [

{

"id": "avneigs4",

"max_parents": 5,

"n": 80,

"d": 4,

"par1": null,

"par2": null,

"method": "er",

"DAG": true

}

]

The parameters \(\Theta_i\) are sampled using the bin_bn module and restricting the conditional probabilities within the range [0.1, 0.9].

"bin_bn": [

{

"id": "binbn",

"min": 0.1,

"max": 0.9

}

]

From each model, we sample a dataset \(\mathbf Y_i\) of size n=320 and using the iid module.

"iid": [

{

"id": "example3",

"standardized": false,

"n": 320

}

]

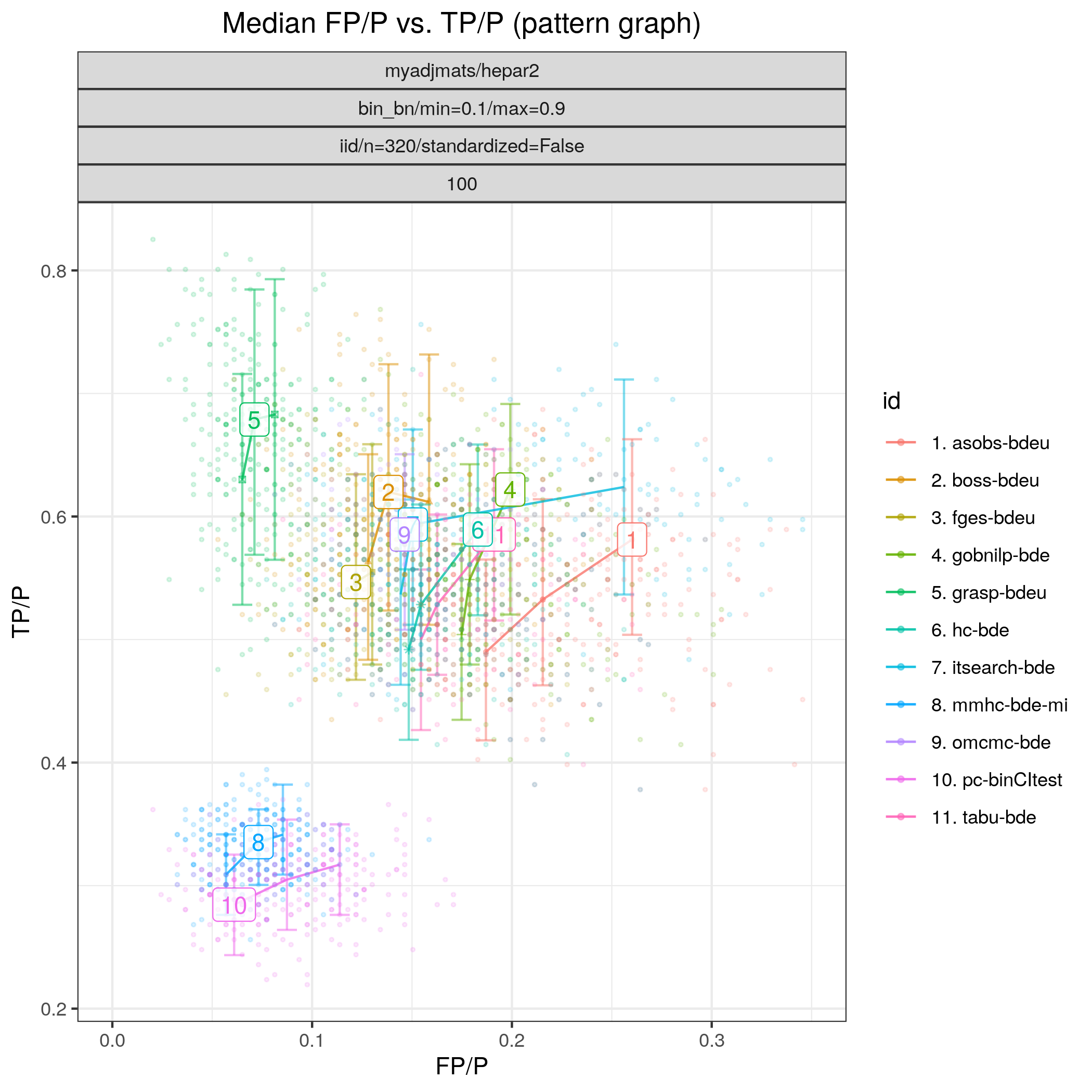

Fig. 14 shows the ROC type curves for the algorithms considered for the discrete data as described above. The algorithms standing out in terms of low SHD in combination with low best median FP/P (< 0.12) and higher best median TPR (>0.5), are tetrad_boss (boss-bdeu), tetrad_grasp (grasp-bdeu), tetrad_fges (fges-bdeu), and bidag_order_mcmc (omcmc-bde).

Fig. 14 FP/P vs. TP/P.

Fig. 15 Timing.

Random Gaussian SEM

Config file: config/paper_er_sem.json.

Command:

snakemake --cores all --use-singularity --configfile config/paper_er_sem.json

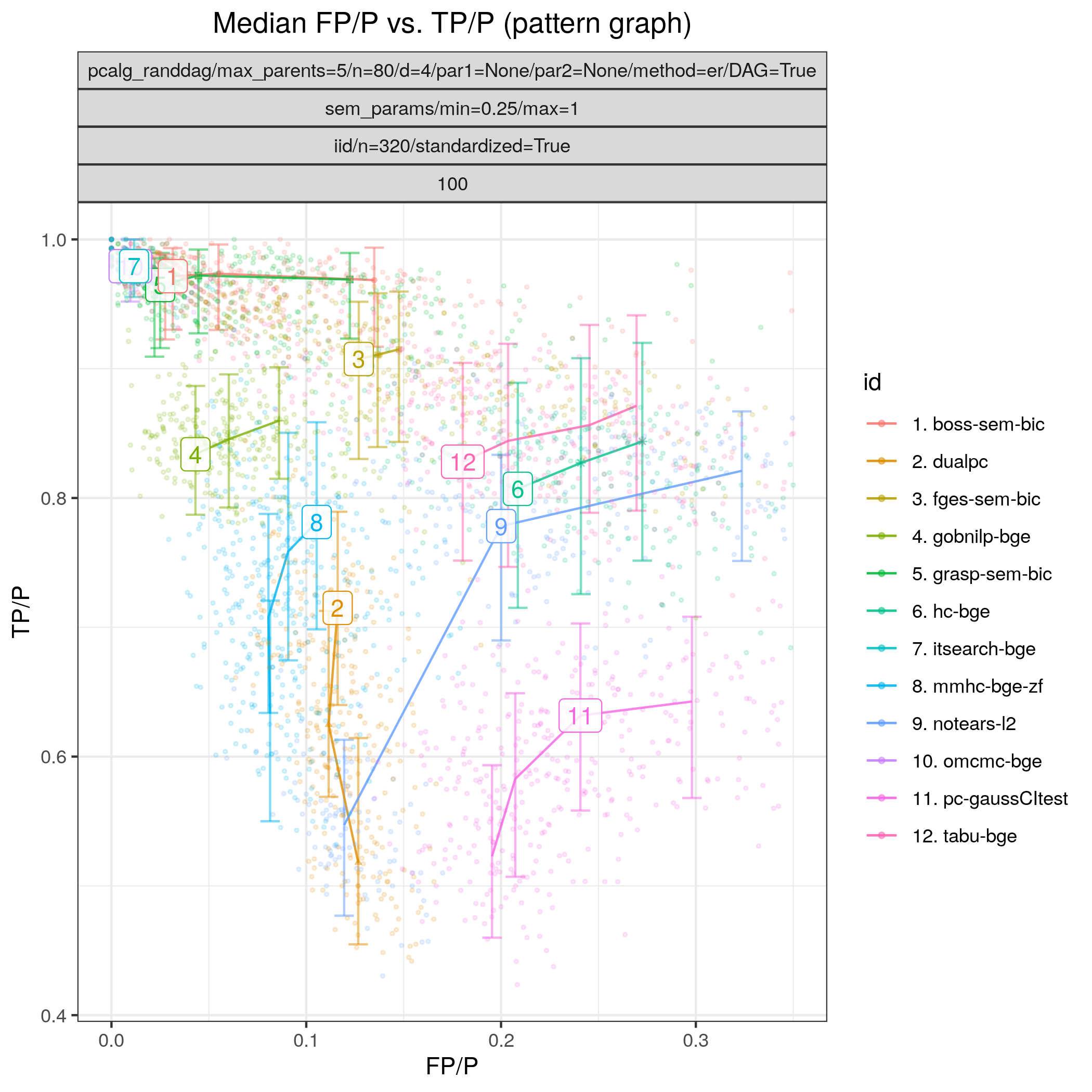

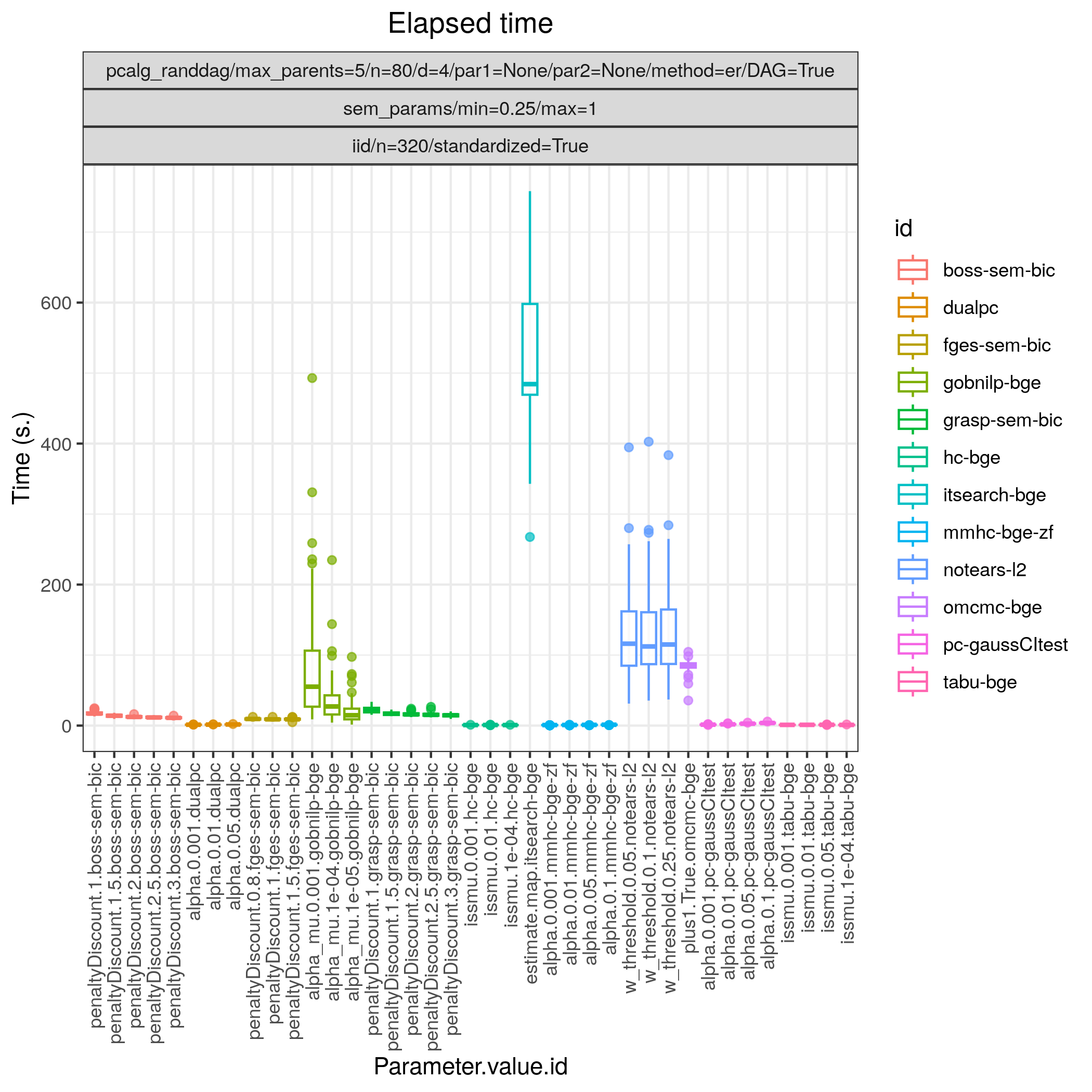

In this example we again study a linear Gaussian random Bayesian networks, of size p=80 and with 100 repetitions \(\{(G_i,\Theta_i)\}_{i=1}^{100}\). As in Random Gaussian SEM (small study), we draw a standardised dataset \(\mathbf Y_i\) of size n=320 from each of the models using the iid module.

Fig. 16 shows results for all the algorithms considered for continuous data as described above. In terms of achieving high TP/P (>0.95) bidag_order_mcmc (omcmc_itsample-bge) and bidag_itsearch (itsearch-bge), tetrad_boss (boss-sem-bic), and tetrad_grasp (grasp-sem-bic) stand out with near perfect performance, i.e., SHD \(\approx 0\). Among the other algorithms gobnilp (gobnilp-bge) performs next best for both sample sizes with TP/F \(\approx 0.85\) and FP/P \(\approx 0.05\), followed by tetrad_fges (fges-sem-bic).

Fig. 16 FP/P vs. TP/P.

Fig. 17 Timing.

Random binary HEPAR II network

Config file: config/paper_hepar2_bin.json.

Command:

snakemake --cores all --use-singularity --configfile config/paper_hepar2_bin.json

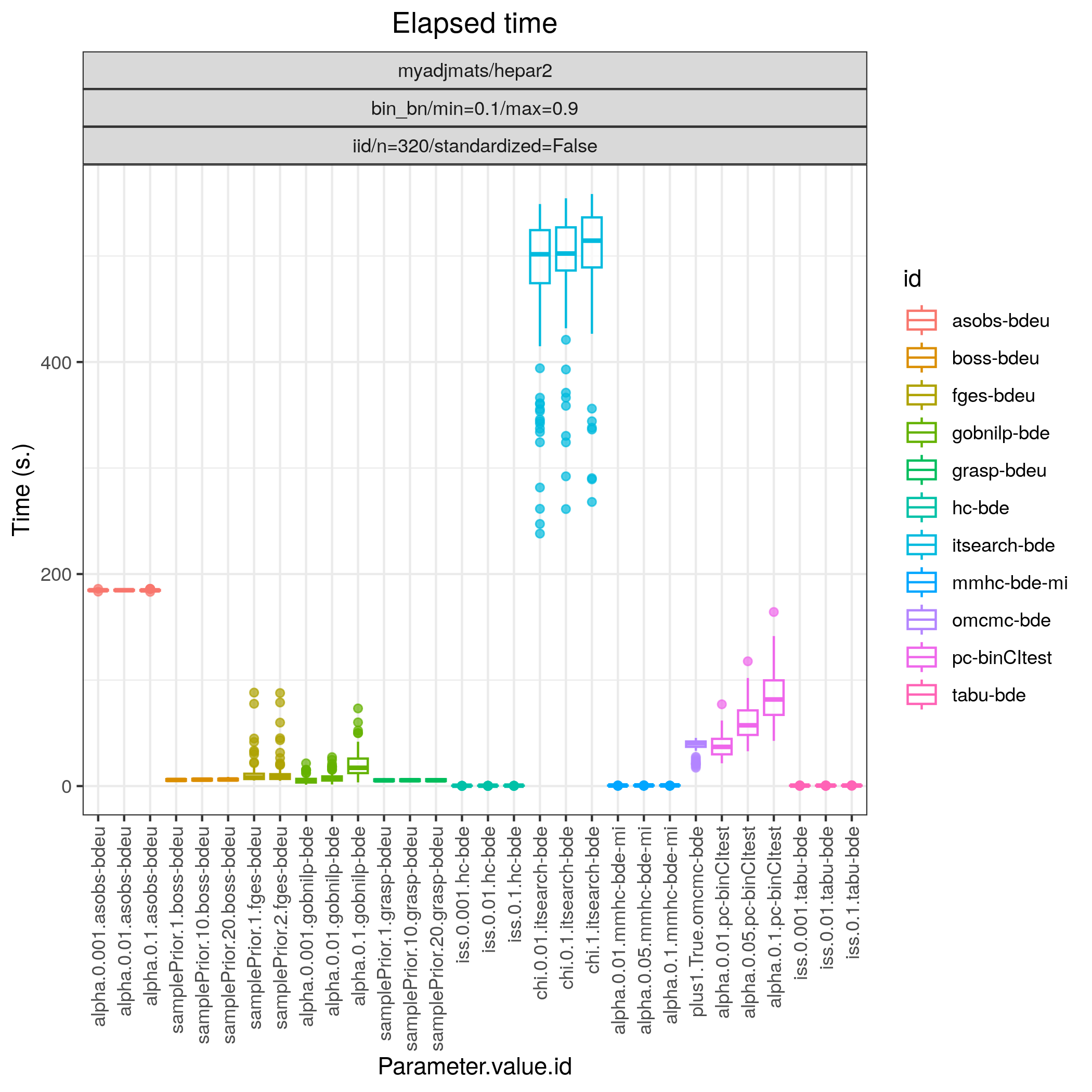

In this example we study a random binary Bayesian network where the graph \(G\) is fixed and the associated parameters \(\Theta\) are regarded as random. More specifically, we consider 100 models \(\{(G_i,\Theta_i)\}_{i=1}^{100}\), where the graph structure \(G\) is that of the well known Bayesian network HEPAR II (hepar2.csv). This graph has 70 nodes and 123 edges and has become one of the standard benchmarks for evaluating the performance of structure learning algorithms. The maximum number of parents per node is 6 and we sample the parameters \(\Theta_i\) using the bin_bn module, in the same manner as described Random binary Bayesian network. From each model \((G_i,\Theta_i)\) we draw a dataset \(\mathbf Y_i\) of size n=320, using the iid module.

Fig. 18 shows the ROC curves for this scenario. The best performing algorithm is clearly tetrad_grasp (grasp-sem-bic), followed by tetrad_boss (boss-sem-bic), tetrad_fges (fges-bdeu), bidag_order_mcmc (omcmc_itsample-bde), bidag_itsearch (itsearch_sample-bde), all with FP/P \(\approx 0.15.\) The constraint based algorithm pcalg_pc (pc-binCItest) and bnlearn_mmhc (mmhc-bde-mi) appear to cluster in the lower scoring region (TP/P <0.4).

Fig. 18 FP/P vs. TP/P.

Fig. 19 Timing.

Random Gaussian HEPAR II network

Config file: config/paper_hepar2_sem.json.

Command:

snakemake --cores all --use-singularity --configfile config/paper_hepar2_sem.json

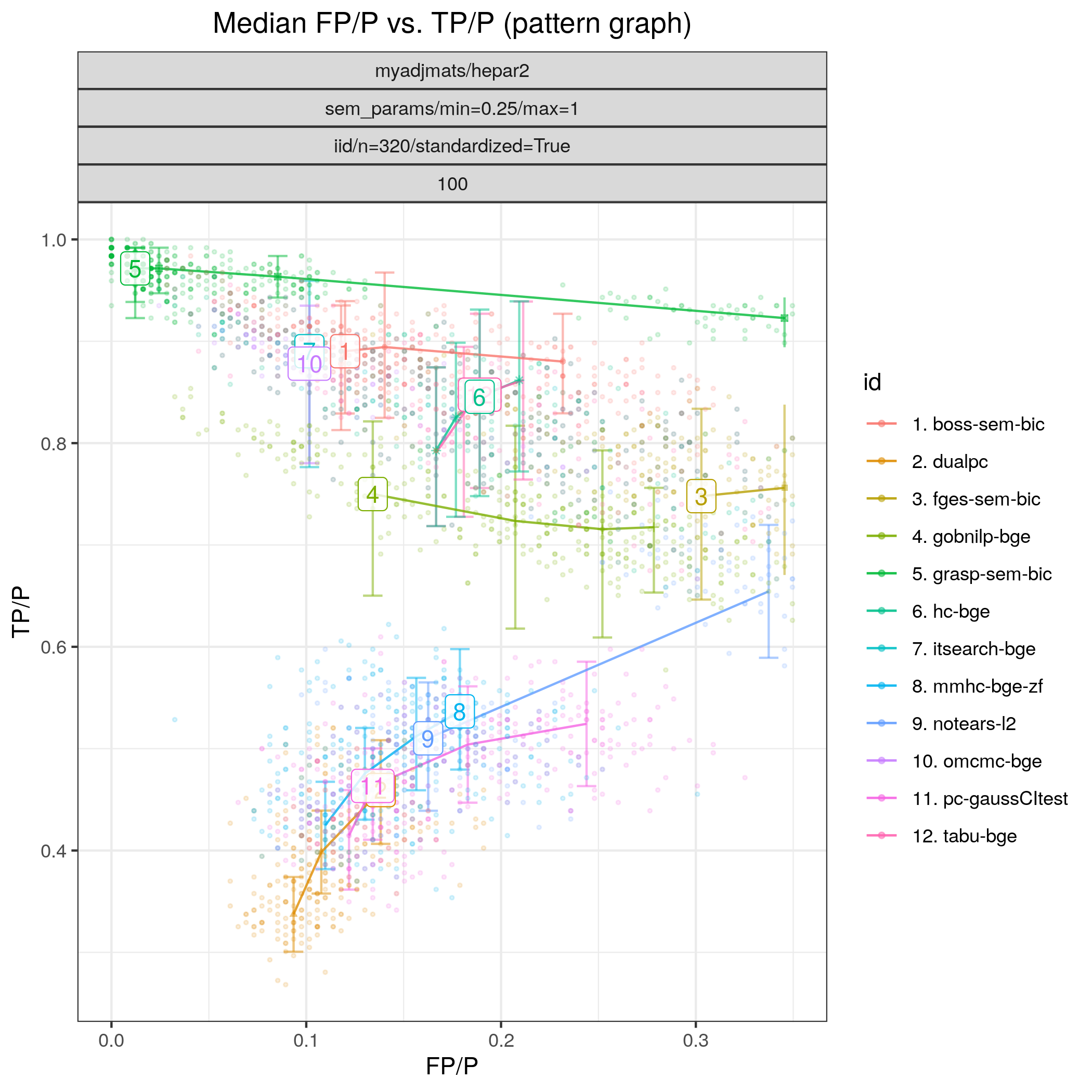

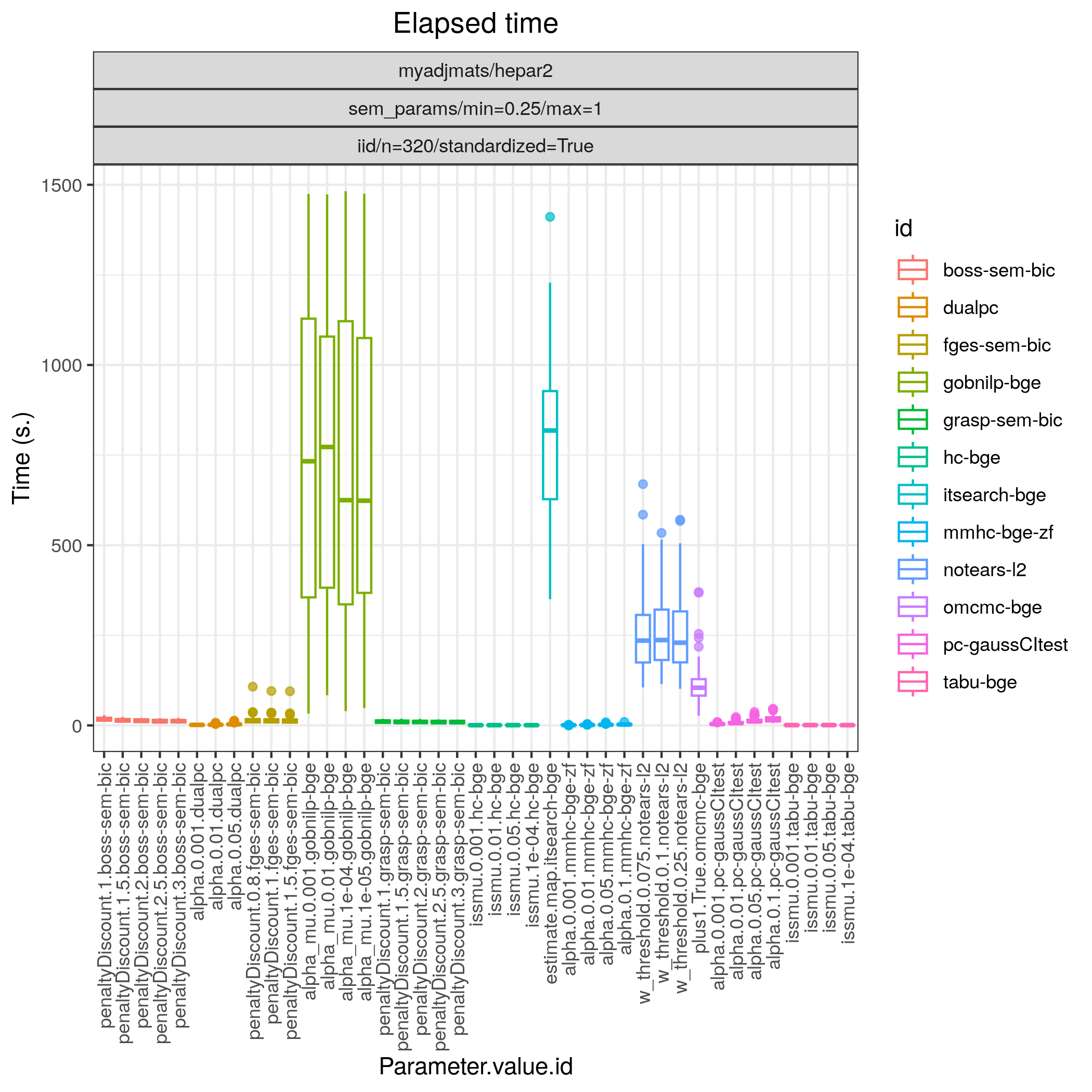

In this example we draw again 100 models \(\{(G_i,\Theta_i)\}_{i=1}^{100}\), where \(G\) corresponds to the HEPAR II network (hepar2.csv), and \(\Theta_i\) are the parameters of a linear Gaussian SEM sampled using the sem_params, module with the same settings as in Random binary Bayesian network. From each model \((G,\Theta_i)\), we draw a standardised data set \(\mathbf Y_i\) of size n=320, using the iid module.

The results of Fig. 20 highlight that tetrad_grasp (grasp-bdeu) has nearly perfect performande.:abbr: Also tetrad_boss (boss-bdeu), bidag_order_mcmc (omcmc_itsample-bdeu), bidag_itsearch (itsearch_sample-bge) separate themselves from the rest in terms of SHD (combining both low FP/P and high TP/P) for both sample sizes.

Fig. 20 FP/P vs. TP/P.

Fig. 21 Timing.